5 Quick Ways to Highlight Duplicates Between Columns

Finding values that appear in more than one column is a routine but important data-cleaning task. Whether you work with spreadsheets, databases, or data visualization tools, highlighting these duplicates helps you uncover patterns, verify data integrity, and catch entry errors. Here are five quick ways to highlight duplicates between columns, each suited to a different tool.

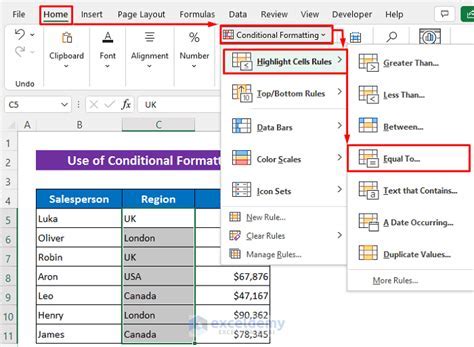

1. Using Conditional Formatting in Excel

Excel remains a go-to tool for many data professionals. Its Conditional Formatting feature makes it easy to highlight duplicates across columns.

Steps:

1. Select the range of cells in both columns you want to compare.

2. Go to Home > Conditional Formatting > Highlight Cells Rules > Duplicate Values.

3. Choose a formatting style (e.g., red fill with dark red text).

4. Click OK.

Excel highlights every value that occurs more than once anywhere in the selected range, so repeats within a single column are highlighted as well.

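Pro Tip: To highlight only the values in Column A that also appear in Column B, select A2:A100, choose New Rule > Use a formula to determine which cells to format, and enter =COUNTIF($B$2:$B$100, A2) > 0. Using > 1 instead would flag a value only when it occurs at least twice in Column B.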
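To see why the comparison threshold matters, here is a minimal Python sketch of what a COUNTIF rule evaluates for each cell. The countif helper and the sample lists are illustrative stand-ins for the worksheet, not part of Excel.

```python
def countif(values, criterion):
    """Count how many entries in `values` equal `criterion`, like COUNTIF."""
    return sum(1 for v in values if v == criterion)


# Hypothetical sample data standing in for Column A and Column B.
column_a = [1, 2, 3, 4, 5]
column_b = [3, 4, 4, 6, 7]

# "> 0": the value appears in Column B at least once.
appears_in_b = [countif(column_b, a) > 0 for a in column_a]

# "> 1": the value appears in Column B two or more times.
repeated_in_b = [countif(column_b, a) > 1 for a in column_a]

print(appears_in_b)   # [False, False, True, True, False]
print(repeated_in_b)  # [False, False, False, True, False]
```

The value 3 occurs once in Column B, so only the > 0 rule flags it.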

2. Google Sheets: Custom Formulas and Conditional Formatting

Google Sheets offers similar functionality with added flexibility for cloud-based collaboration.

Steps:

1. Select the range you want to highlight, for example A2:A100.

2. Go to Format > Conditional formatting.

3. In the Format rules panel, set the Format cells if dropdown to Custom formula is.

4. Enter a formula like =COUNTIF($B:$B, A2) > 0, which is true when the value in A2 appears anywhere in Column B. The cell reference must match the first cell of the selected range.

5. Choose a formatting style and click Done.

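Advanced Tip: To flag matches in a helper column rather than with formatting, wrap the check in ARRAYFORMULA so that a single formula fills the whole column, for example =ARRAYFORMULA(COUNTIF(B2:B100, A2:A100) > 0).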

3. Python with Pandas: Programmatic Approach

For data analysts and programmers, Python’s Pandas library provides a powerful way to identify and highlight duplicates programmatically.

Example Code:

    import pandas as pd

    # Sample DataFrame
    data = {'Column1': [1, 2, 3, 4, 5], 'Column2': [3, 4, 5, 6, 7]}
    df = pd.DataFrame(data)

    # Rows whose Column1 value appears anywhere in Column2
    duplicates = df[df['Column1'].isin(df['Column2'])]

    # Highlight duplicates (e.g., using a new column)
    df['IsDuplicate'] = df['Column1'].isin(df['Column2'])

    print(df)

Output:

       Column1  Column2  IsDuplicate
    0        1        3        False
    1        2        4        False
    2        3        5         True
    3        4        6         True
    4        5        7         True
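The example above flags only Column1. A small extension, sketched here with the same sample data, flags the matching values in both columns. The flag column names are made up for illustration.

```python
import pandas as pd

df = pd.DataFrame({'Column1': [1, 2, 3, 4, 5], 'Column2': [3, 4, 5, 6, 7]})

# Values present in both columns, regardless of row position.
shared = set(df['Column1']) & set(df['Column2'])

# One boolean flag per column (illustrative column names).
df['Column1_in_Column2'] = df['Column1'].isin(shared)
df['Column2_in_Column1'] = df['Column2'].isin(shared)

print(sorted(shared))
print(df)
```

Building the shared set once keeps the two flags consistent with each other.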

4. SQL Queries for Database Duplicates

If your data resides in a database, SQL queries can efficiently identify duplicates between columns.

Example Query:

    SELECT Column1, Column2
    FROM YourTable
    WHERE Column1 IN (SELECT Column2 FROM YourTable)
      AND Column1 != Column2;

Explanation: This query returns rows whose Column1 value appears somewhere in Column2, excluding rows where Column1 and Column2 hold the same value.
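To try the query without a database server, the following sketch runs it against an in-memory SQLite database using Python's built-in sqlite3 module. The sample rows are assumptions chosen for illustration, including the (8, 8) row that the != condition filters out.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE YourTable (Column1 INTEGER, Column2 INTEGER)")
conn.executemany(
    "INSERT INTO YourTable VALUES (?, ?)",
    [(1, 3), (2, 4), (3, 5), (4, 6), (5, 7), (8, 8)],  # hypothetical data
)

# Same query as above, with ORDER BY added so the result order is predictable.
rows = conn.execute(
    """
    SELECT Column1, Column2
    FROM YourTable
    WHERE Column1 IN (SELECT Column2 FROM YourTable)
      AND Column1 != Column2
    ORDER BY Column1
    """
).fetchall()
conn.close()

print(rows)  # [(3, 5), (4, 6), (5, 7)]
```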

Pro Tip: Use GROUP BY and HAVING to count occurrences and filter duplicates more dynamically.
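One way to read the GROUP BY and HAVING suggestion, sketched with SQLite as an assumed engine: stack both columns into a single list of values tagged with their source column, then keep the values that occur in both sources. The combined, value, and source names are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE YourTable (Column1 INTEGER, Column2 INTEGER)")
conn.executemany(
    "INSERT INTO YourTable VALUES (?, ?)",
    [(1, 3), (2, 4), (3, 5), (4, 6), (5, 7), (1, 9)],  # hypothetical data
)

# Count occurrences per value and keep values seen in both columns.
shared_counts = conn.execute(
    """
    SELECT value, COUNT(*) AS occurrences
    FROM (
        SELECT Column1 AS value, 'Column1' AS source FROM YourTable
        UNION ALL
        SELECT Column2, 'Column2' FROM YourTable
    ) AS combined
    GROUP BY value
    HAVING COUNT(DISTINCT source) = 2
    ORDER BY value
    """
).fetchall()
conn.close()

print(shared_counts)  # [(3, 2), (4, 2), (5, 2)]
```

The value 1 appears twice in Column1 but never in Column2, so the HAVING clause excludes it.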

5. Power BI: Using DAX Measures

For business intelligence professionals, Power BI’s DAX language allows you to highlight duplicates visually.

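Steps:

1. Load your data into Power BI.

2. Create a new measure using DAX:

    Duplicate Count = COUNTROWS(FILTER(Table, Table[Column1] = Table[Column2]))

3. Use this measure in a table or matrix visual to highlight duplicates.

Note: As written, the measure counts rows where Column1 and Column2 hold the same value in the same row. It does not detect a Column1 value that appears in a different row of Column2. Replace Table with the name of your own table.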

Visualization Tip: Apply conditional formatting to the visual to color-code duplicates based on the Duplicate Count measure.
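The measure above compares the two columns row by row. The Python sketch below, using made-up rows, contrasts that same-row count with a per-row count of how often each Column1 value appears anywhere in Column2. It only illustrates the two semantics and is not DAX.

```python
# Hypothetical (Column1, Column2) rows.
rows = [(1, 3), (2, 2), (3, 5), (4, 3)]

# Same-row comparison: rows where Column1 equals Column2.
same_row_matches = sum(1 for c1, c2 in rows if c1 == c2)

# Cross-row comparison: occurrences of each Column1 value in all of Column2.
column2_values = [c2 for _, c2 in rows]
cross_row_counts = [column2_values.count(c1) for c1, _ in rows]

print(same_row_matches)  # 1
print(cross_row_counts)  # [0, 1, 2, 0]
```

Here the value 3 in Column1 matches two rows of Column2, yet it does not contribute to the same-row count.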

Can I highlight duplicates across more than two columns?

Yes, most tools allow comparing multiple columns. In Excel, select all columns before applying Conditional Formatting. In Python, extend the logic to include additional columns. For SQL, modify the query to include more columns in the comparison.
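As a rough sketch of extending the logic to more columns in Python, the snippet below counts how many distinct columns each value appears in and flags values found in at least two. The third column and all sample values are assumptions for illustration.

```python
from collections import Counter

# Hypothetical data for three columns.
columns = {
    "Column1": [1, 2, 3, 4, 5],
    "Column2": [3, 4, 5, 6, 7],
    "Column3": [5, 6, 8, 9, 1],
}

# Count the number of distinct columns each value appears in.
column_hits = Counter()
for values in columns.values():
    column_hits.update(set(values))  # set() so repeats within a column count once

# A value is a cross-column duplicate if it shows up in two or more columns.
shared = {value for value, hits in column_hits.items() if hits > 1}

# Per-column boolean flags, in the original row order.
flags = {name: [v in shared for v in values] for name, values in columns.items()}

print(sorted(shared))  # [1, 3, 4, 5, 6]
```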

How can I remove duplicates instead of just highlighting them?

In Excel, use the "Remove Duplicates" feature under the Data tab. In Python, use `df.drop_duplicates()`. In SQL, use the `DISTINCT` keyword or `DELETE` with a `WHERE` clause.
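A short sketch of the Pandas option, assuming a small made-up DataFrame: `drop_duplicates()` with no arguments removes rows that repeat across every column, while the `subset` parameter restricts the comparison to the columns you name.

```python
import pandas as pd

# Hypothetical data with one fully repeated row and one repeated Column1 value.
df = pd.DataFrame({
    'Column1': [1, 2, 2, 3, 3],
    'Column2': ['a', 'b', 'b', 'c', 'd'],
})

# Drop rows that are identical across all columns (keeps the first occurrence).
unique_rows = df.drop_duplicates()

# Drop rows that repeat a value in Column1 only.
unique_column1 = df.drop_duplicates(subset=['Column1'], keep='first')

print(unique_rows)
print(unique_column1)
```

Both calls return a new DataFrame and leave `df` unchanged.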

Is it possible to highlight duplicates based on partial matches?

Yes. Use `SEARCH` in Excel, `LIKE` or a dialect-specific function such as PostgreSQL's `STRPOS` in SQL, and `str.contains()` in Pandas.
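For the Pandas case, a minimal sketch might look like the following. It flags each Column1 entry that occurs as a case-insensitive substring of any Column2 entry. The sample strings and the PartialMatch column name are illustrative assumptions.

```python
import pandas as pd

# Hypothetical text data.
df = pd.DataFrame({
    'Column1': ['apple', 'kiwi', 'berry'],
    'Column2': ['Apple pie', 'blueberry jam', 'peach tart'],
})

# For each term in Column1, check whether any Column2 entry contains it.
# regex=False treats the term as plain text rather than a pattern.
df['PartialMatch'] = [
    bool(df['Column2'].str.contains(term, case=False, regex=False).any())
    for term in df['Column1']
]

print(df)
```

This checks every term against the whole column, so it is best suited to modest column sizes.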

By mastering these techniques, you’ll save time and improve the accuracy of your data analysis, regardless of the tool you’re using.